A week ago, on December 1st, Amazon soft-launched SageMaker Studio Lab, a free and simplified version of SageMaker Studio which requires neither a credit card nor an AWS or Amazon account. (At the time of publishing, there is a waitlist to get an account. Amazon states it should take one to five business days to get approval. My application was approved the same day.) SageMaker Studio Lab provides a CPU instance with a time limit of twelve hours and a GPU instance with a time limit of four hours. It joins Google Colab, Kaggle, and Paperspace in the free machine learning and deep learning compute space.

The obvious question becomes: how does SageMaker Studio Lab stack up against the competition? And should you start using it?

In this post I will compare and benchmark training neural networks on SageMaker Studio Lab against Google Colab, Colab Pro, and Kaggle using image and NLP classification tasks. (I have not used Colab Pro+ or Paperspace Gradient, so I will not include them in this comparison.)

# Comparison with Colab and Kaggle

Like Google Colab and Kaggle, Studio Lab offers both CPU and GPU instances: a T3.xlarge CPU instance with a 12 hour runtime and a G4dn.xlarge GPU instance with a 4 hour runtime. I will limit this comparison to the GPU instances provided by all three services.

GPU Instance Comparison Overview

| Service | Instance | CPU | Generation | RAM | GPU | GPU RAM | Max Scratch | Storage | Max Runtime | Cost |
|---|---|---|---|---|---|---|---|---|---|---|
| Studio Lab | - | 4 CPUs | Cascade Lake | 16GB | Tesla T4 | 15109MiB | - | 15GB | 4 hours | Free |
| Colab | - | 2 CPUs | Varies | 13GB | Tesla K80 | 11441MiB | 60GB | 15GB | 12 hours | Free |
| Colab Pro | Normal | 2 CPUs | Varies | 13GB | Tesla P100 | 16280MiB | 124GB | 15GB | 24 hours | $10/m |
| Colab Pro | High RAM | 4 CPUs | Varies | 26GB | Tesla P100 | 16280MiB | 124GB | 15GB | 24 hours | $10/m |
| Kaggle | - | 2 CPUs | Varies | 13GB | Tesla P100 | 16280MiB | 90GB | 20GB | 9 hours | Free |

See below for clarifications.

Some expansion on the table:

- SageMaker Studio Lab only has persistent storage, but unlike Google Drive, it is fast enough to train from.

- I’ve observed the following CPU generations on Colab and Kaggle: Haswell, Broadwell, Skylake, and Cascade Lake. Anecdotally, most seem to be Haswell or Broadwell.

- Colab scratch disk varies per instance; the table reports my recent maximum observed sizes.

- Colab’s persistent storage is Google Drive’s free allocation.

- Colab Pro could assign a Tesla T4 or Tesla K80. I’ve consistently been assigned Tesla P100s since Tesla V100s were relegated to Pro+. I’ve only been assigned a K80 once. A T4 has not been assigned to me in months. (The day after publishing this post Google assigned me the first T4 in months, but between then and the update, they’ve all been P100s.)

- The free version of Colab could also assign a Tesla T4 or Tesla P100, but in my very limited recent usage of it I’ve only been assigned K80s.

- The Colab FAQ and Colab Pro/Pro+ FAQ say different things about maximum runtime. I’ve observed the max runtime on the Pro FAQ to be accurate, but others have not.

- Colab Pro can have a pop-up to verify you are at the computer, but once checked it is not shown again.

- Kaggle’s persistent storage is 20GB per notebook. There is also 100GB per account for private dataset storage.

- Kaggle has a maximum weekly GPU runtime which varies based on total usage, but is around 40 hours per week, plus or minus a few hours.

# SageMaker Studio Lab

Launching SageMaker Studio Lab results in a lightly modified JupyterLab instance with a few extensions such as Git installed, accented with Studio Lab purple.

In my limited testing, SageMaker Studio Lab’s JupyterLab behaves exactly as a normal installation of JupyterLab does on your own system. It even appears that modifications to JupyterLab and installed Python packages persist. (One nice feature of persistence is that you can install packages and download or pre-process data on a CPU instance, should Amazon demote you to the bottom of a queue due to GPU usage in the future. Currently there appears to be no queue.)

For example, I was able to install a Python language server and markdown spellchecker from this JupyterLab Awesome List, although I did not attempt to install any extensions which require NodeJS. (I’ve heard that installing NodeJS via conda doesn’t work for Jupyter extensions.) This persistence of packages does bring up the question of whether Amazon will update pre-installed packages like PyTorch, or if maintaining an updated environment falls completely on the user. (I’ve heard anecdotally of one instance of a bricked environment where the only solution was to delete the Studio Lab account and reapply for a new one.)

It is possible that Amazon delayed destroying my instance or will upgrade the underlying image sometime in the future, removing the custom installed packages and extensions. I will update this post should that occur. But for now, Studio Lab is the most customizable service of the three.

I installed Python packages this way and from the JupyterLab terminal without issue, although from the FAQ I am not sure whether the JupyterLab terminal uses the correct installation method.

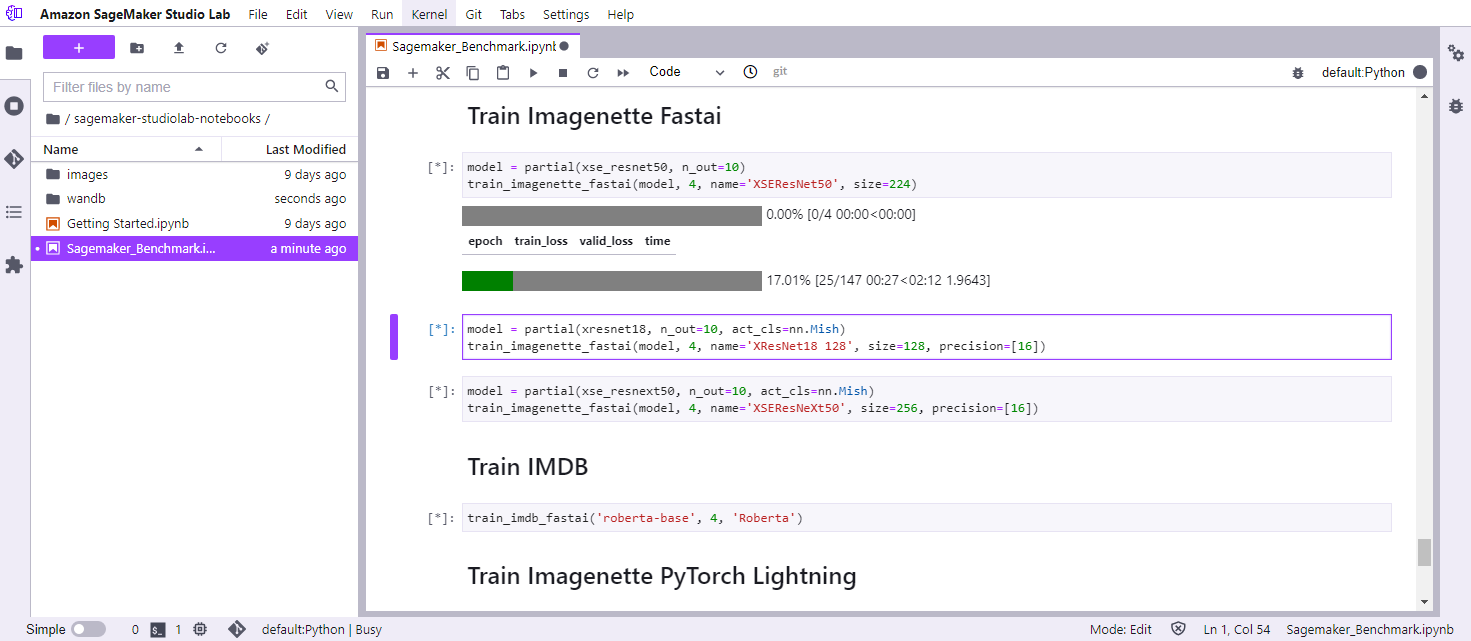

# Benchmarking

Code and raw results are available here. As Kaggle’s GPU instance and Colab Pro’s GPU instance with 2 CPUs are almost, if not the exact same set of machines from GCP, I have elected to only run the benchmark on Colab Pro’s 2 CPU instance and will let those results stand in for Kaggle.

# Datasets and Models

I selected two small datasets for benchmarking SageMaker Studio Lab against Colab: Imagenette for computer vision and IMDB from Hugging Face for NLP. To reduce training times, I randomly sampled twenty percent of the IMDB training and test sets.
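A minimal sketch of drawing a twenty percent subsample like the one described above, using only the standard library. The helper name `sample_indices` and the seeds are illustrative assumptions, not taken from the benchmark code. IMDB's training and test splits each contain 25,000 reviews.

```python
import random


def sample_indices(n_items: int, fraction: float, seed: int = 42) -> list[int]:
    """Return a sorted random subset of row indices covering `fraction` of the data."""
    k = round(n_items * fraction)
    rng = random.Random(seed)  # seeded so the subsample is reproducible
    return sorted(rng.sample(range(n_items), k))


# IMDB ships 25,000 training and 25,000 test reviews.
train_idx = sample_indices(25_000, 0.2)
test_idx = sample_indices(25_000, 0.2, seed=43)  # hypothetical second seed
print(len(train_idx), len(test_idx))
```

With Hugging Face Datasets, index lists like these can be passed to `Dataset.select` to build the reduced splits.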

For computer vision, I selected XResNet and XSE-ResNet, fastai versions of ResNet (He et al., 2016) with the architectural improvements from the Bag of Tricks paper (He et al., 2019) and, for the latter, squeeze and excitation (Hu et al., 2020).

- Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

- Tong He, Zhi Zhang, Hang Zhang, Zhongyue Zhang, Junyuan Xie, and Mu Li. 2019. Bag of Tricks for Image Classification with Convolutional Neural Networks. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 558–567. DOI:10.1109/CVPR.2019.00065

- Jie Hu, Li Shen, Samuel Albanie, Gang Sun, and Enhua Wu. 2020. Squeeze-and-Excitation Networks. IEEE Transactions on Pattern Analysis and Machine Intelligence 42, 8 (2020), 2011–2023. DOI:10.1109/TPAMI.2019.2913372

For NLP, I used the Hugging Face implementation of RoBERTa (Liu et al., 2019).

- Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, and Veselin Stoyanov. 2019. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv:1907.11692.

# Training Setup

I trained Imagenette using fast.ai with random resized crop and random horizontal flip from fast.ai’s augmentations.

To train IMDB, I used fast.ai and Hugging Face Transformers via blurr. In addition to adding Transformers training and inference support to fast.ai, blurr integrates per batch tokenization and fast.ai’s text dataloader which randomly sorts the dataset based on sequence length to minimize padding while training.
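To illustrate why grouping sequences by length reduces padding, here is a small standard-library sketch. It is not blurr's or fast.ai's implementation: the token counts are randomly generated placeholders, and the helpers `chunk` and `total_padding` are hypothetical names introduced only for this example.

```python
import random


def total_padding(batches: list[list[int]]) -> int:
    """Count padding tokens when each batch is padded to its longest sequence."""
    return sum(max(batch) * len(batch) - sum(batch) for batch in batches)


def chunk(lengths: list[int], batch_size: int) -> list[list[int]]:
    """Split a list of sequence lengths into consecutive batches."""
    return [lengths[i:i + batch_size] for i in range(0, len(lengths), batch_size)]


rng = random.Random(0)
# Hypothetical token counts for 1,024 reviews.
lengths = [rng.randint(20, 512) for _ in range(1024)]

# Baseline: batch the reviews in their original (random) order.
random_batches = chunk(lengths, 16)

# Group similar lengths together, then shuffle the order of the batches
# so training still sees them in a random order.
sorted_batches = chunk(sorted(lengths), 16)
rng.shuffle(sorted_batches)

print("random batching padding:", total_padding(random_batches))
print("length-sorted padding:  ", total_padding(sorted_batches))
```

Because every batch is padded to its own longest sequence, batches built from similar lengths spend far fewer tokens on padding than batches drawn in arbitrary order.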

I trained XSE-ResNet50 and RoBERTa base using both single and mixed precision. XSE-ResNet50 was trained at an image size of 224 pixels with a batch size of 64 for mixed and 32 for single precision. RoBERTa was trained at a batch size of 16 for mixed precision and 8 for single precision.

To simulate a CPU bound task, I also trained an XResNet18 model at an image size of 128 pixels and batch size of 64.

# Results

As one would anticipate from the GPUs’ technical specs, the Tesla T4 on SageMaker Studio Lab outperforms the Tesla P100 on Google Colab when training in mixed precision, but lags when training full single precision models. I will primarily compare the Colab Pro High RAM P100 instance (shortened to Colab Pro P100; likewise, I will shorten Colab Pro High RAM Tesla T4 to Colab Pro T4) with SageMaker Studio Lab, as they are the most similar sans GPU.

The Colab Pro High RAM Tesla T4 instance performs almost identically to SageMaker Studio Lab in the first benchmark, which is no surprise as they both have a Tesla T4. But in later benchmarks Studio Lab is statistically faster than Colab Pro T4 in some GPU actions, despite being the same hardware. Perhaps this is just luck of the draw.

Either way, Colab Pro T4 remains significantly faster than Colab Pro P100, so it is unfortunate that Google seems to rarely assign the faster mixed precision GPU and prefers Tesla P100 instances. (And cheaper. A Tesla T4 costs 1.46/hr per GPU. Prices can vary per GCP region.)

# XSE-ResNet50

Comparing Colab Pro P100 with SageMaker Studio Lab, XSE-ResNet50 trains 17.4 percent faster overall on Studio Lab. When looking at just the training loop, which is the batch plus draw action, Studio Lab is 19.6 percent faster than Colab Pro P100. Studio Lab is faster in all actions with one notable exception: the backward pass, where Studio Lab is 10.4 percent slower than Colab Pro P100.

XSE-ResNet50 Mixed Precision Imagenette Simple Profiler Results

| | | Mean Duration | | | | Duration Std Dev | | | | Total Time | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Phase | Action | Colab Pro | Pro P100 | Pro T4 | Studio Lab | Colab Pro | Pro P100 | Pro T4 | Studio Lab | Colab Pro | Pro P100 | Pro T4 | Studio Lab |
| fit | fit | | | | | | | | | 689.1 s | 673.5 s | 559.8 s | 556.4 s |
| | epoch | 172.2 s | 168.3 s | 139.9 s | 139.0 s | 3.227 s | 2.418 s | 3.826 s | 1.708 s | 688.7 s | 673.1 s | 559.5 s | 556.1 s |
| | train | 147.5 s | 149.3 s | 123.6 s | 124.3 s | 2.045 s | 1.972 s | 3.145 s | 1.390 s | 590.2 s | 597.4 s | 494.3 s | 497.4 s |
| | validate | 24.62 s | 18.93 s | 16.30 s | 14.66 s | 1.198 s | 446.4ms | 682.8ms | 386.6ms | 98.48 s | 75.72 s | 65.18 s | 58.64 s |
| train | batch | 990.2ms | 1.006 s | 805.1ms | 809.8ms | 217.5ms | 231.6ms | 355.4ms | 307.8ms | 582.3 s | 591.6 s | 473.4 s | 476.1 s |
| | step | 612.3ms | 627.8ms | 460.9ms | 423.3ms | 23.88ms | 23.83ms | 19.84ms | 18.55ms | 360.1 s | 369.1 s | 271.0 s | 248.9 s |
| | backward | 309.1ms | 307.3ms | 279.8ms | 339.3ms | 129.6ms | 130.2ms | 207.7ms | 188.5ms | 181.7 s | 180.7 s | 164.5 s | 199.5 s |
| | pred | 50.30ms | 52.64ms | 46.47ms | 33.54ms | 80.19ms | 82.73ms | 124.9ms | 106.8ms | 29.57 s | 30.95 s | 27.33 s | 19.72 s |
| | draw | 14.86ms | 14.64ms | 14.42ms | 10.70ms | 52.75ms | 71.08ms | 69.03ms | 49.37ms | 8.738 s | 8.608 s | 8.482 s | 6.290 s |
| | zero grad | 2.087ms | 2.484ms | 2.324ms | 1.899ms | 330.6µs | 351.4µs | 476.0µs | 72.35µs | 1.227 s | 1.461 s | 1.367 s | 1.117 s |
| | loss | 1.412ms | 1.191ms | 1.077ms | 998.7µs | 156.5µs | 127.6µs | 296.7µs | 173.5µs | 830.2ms | 700.2ms | 633.6ms | 587.2ms |
| valid | batch | 184.8ms | 82.08ms | 75.39ms | 54.24ms | 142.3ms | 138.7ms | 148.9ms | 119.6ms | 45.83 s | 20.36 s | 18.70 s | 13.45 s |
| | pred | 111.0ms | 55.52ms | 49.08ms | 35.02ms | 122.1ms | 66.76ms | 90.63ms | 84.52ms | 27.53 s | 13.77 s | 12.17 s | 8.686 s |
| | draw | 71.74ms | 25.09ms | 25.01ms | 18.04ms | 71.54ms | 116.1ms | 114.2ms | 82.44ms | 17.79 s | 6.221 s | 6.201 s | 4.475 s |
| | loss | 1.642ms | 1.253ms | 1.130ms | 990.5µs | 1.967ms | 411.8µs | 795.7µs | 1.323ms | 407.1ms | 310.8ms | 280.2ms | 245.7ms |

Kaggle results should be equivalent to Colab Pro, which is the normal P100 instance. Pro P100 is the Colab Pro High RAM P100 instance. Pro T4 is the Colab Pro High RAM T4 instance. Results measured with Simple Profiler Callback.
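The percentages quoted for this benchmark can be reproduced from the table's Pro P100 and Studio Lab columns. The sketch below reflects my reading of the convention, inferred from the numbers matching: "faster" is the reduction in time relative to the Colab Pro P100, and "slower" is the increase relative to it. The function names are illustrative.

```python
def percent_faster(baseline: float, candidate: float) -> float:
    """Reduction in time relative to the baseline, in percent."""
    return (baseline - candidate) / baseline * 100


def percent_slower(baseline: float, candidate: float) -> float:
    """Increase in time relative to the baseline, in percent."""
    return (candidate - baseline) / baseline * 100


# Values from the mixed precision table (Pro P100 vs Studio Lab).
overall = percent_faster(673.5, 556.4)              # total fit time, seconds
loop = percent_faster(1006 + 14.64, 809.8 + 10.70)  # train batch + draw means, ms
backward = percent_slower(307.3, 339.3)             # backward pass mean, ms

print(f"{overall:.1f}% {loop:.1f}% {backward:.1f}%")  # 17.4% 19.6% 10.4%
```

The same two functions applied to the other results tables give the remaining percentages in this post.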

When training XSE-ResNet50 in single precision, the results flip, with Studio Lab performing 95.9 percent slower than Colab Pro P100. The training loop is 93.8 percent slower than Colab Pro P100, although this is solely due to the backward pass and optimizer step, where Studio Lab is 105 percent slower than Colab Pro P100 while being 41.1 percent faster during all other actions.

XSE-ResNet50 Single Precision Imagenette Simple Profiler Results

| | | Mean Duration | | | | Duration Std Dev | | | | Total Time | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Phase | Action | Colab Pro | Pro P100 | Pro T4 | Studio Lab | Colab Pro | Pro P100 | Pro T4 | Studio Lab | Colab Pro | Pro P100 | Pro T4 | Studio Lab |
| fit | fit | | | | | | | | | 663.4 s | 645.7 s | 1.391ks | 1.265ks |
| | epoch | 165.8 s | 161.3 s | 347.6 s | 316.3 s | 2.120 s | 2.188 s | 3.918 s | 9.994 s | 663.1 s | 645.3 s | 1.390ks | 1.265ks |
| | train | 142.7 s | 142.7 s | 309.1 s | 282.7 s | 1.657 s | 1.710 s | 3.423 s | 8.503 s | 570.8 s | 571.0 s | 1.236ks | 1.131ks |
| | validate | 23.07 s | 18.57 s | 38.49 s | 33.61 s | 465.2ms | 477.5ms | 497.5ms | 1.580 s | 92.30 s | 74.29 s | 153.9 s | 134.4 s |
| train | batch | 953.3ms | 961.1ms | 2.061 s | 1.881 s | 190.7ms | 205.3ms | 293.3ms | 291.1ms | 560.5 s | 565.1 s | 1.212ks | 1.106ks |
| | step | 626.6ms | 634.0ms | 1.437 s | 1.222 s | 24.29ms | 24.00ms | 59.45ms | 68.18ms | 368.4 s | 372.8 s | 844.9 s | 718.3 s |
| | backward | 262.4ms | 260.3ms | 556.0ms | 615.1ms | 116.9ms | 117.3ms | 190.2ms | 188.3ms | 154.3 s | 153.1 s | 326.9 s | 361.7 s |
| | pred | 45.87ms | 48.43ms | 50.19ms | 30.54ms | 66.68ms | 68.29ms | 120.5ms | 116.9ms | 26.97 s | 28.48 s | 29.51 s | 17.96 s |
| | draw | 14.84ms | 14.58ms | 14.41ms | 10.75ms | 51.51ms | 70.71ms | 69.64ms | 49.31ms | 8.727 s | 8.573 s | 8.471 s | 6.324 s |
| | zero grad | 2.153ms | 2.519ms | 2.418ms | 2.042ms | 362.9µs | 375.2µs | 478.3µs | 74.03µs | 1.266 s | 1.481 s | 1.422 s | 1.200 s |
| | loss | 1.298ms | 1.109ms | 979.8µs | 914.1µs | 343.9µs | 127.9µs | 123.6µs | 156.4µs | 763.1ms | 652.2ms | 576.1ms | 537.5ms |
| valid | batch | 162.4ms | 80.50ms | 68.12ms | 44.00ms | 130.5ms | 146.1ms | 147.7ms | 130.0ms | 40.26 s | 19.96 s | 16.89 s | 10.91 s |
| | pred | 92.53ms | 53.83ms | 43.95ms | 26.26ms | 107.5ms | 74.66ms | 79.61ms | 95.61ms | 22.95 s | 13.35 s | 10.90 s | 6.513 s |
| | draw | 67.73ms | 25.21ms | 23.35ms | 17.12ms | 73.53ms | 117.0ms | 116.4ms | 83.93ms | 16.80 s | 6.251 s | 5.791 s | 4.245 s |
| | loss | 1.758ms | 1.231ms | 702.6µs | 540.3µs | 2.174ms | 1.460ms | 318.8µs | 198.8µs | 435.9ms | 305.2ms | 174.2ms | 134.0ms |

Kaggle results should be equivalent to Colab Pro, which is the normal P100 instance. Pro P100 is the Colab Pro High RAM P100 instance. Pro T4 is the Colab Pro High RAM T4 instance. Results measured with Simple Profiler Callback.

# RoBERTa

Training RoBERTa in mixed precision, Studio Lab pulls further ahead of Colab Pro P100, performing 29.1 percent faster. Studio Lab is 32.1 percent faster than Colab Pro P100 during the training loop and is faster in all actions except for zeroing the gradients, where Studio Lab is 66.7 percent slower.

RoBERTa Mixed Precision Benchmark Results

| | | Mean Duration | | | | Duration Std Dev | | | | Total Time | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Phase | Action | Colab Pro | Pro P100 | Pro T4 | Studio Lab | Colab Pro | Pro P100 | Pro T4 | Studio Lab | Colab Pro | Pro P100 | Pro T4 | Studio Lab |
| fit | fit | | | | | | | | | 828.9 s | 842.3 s | 647.7 s | 596.8 s |
| | epoch | 207.2 s | 210.6 s | 161.9 s | 149.2 s | 151.0ms | 62.80ms | 2.517 s | 458.8ms | 828.9 s | 842.2 s | 647.7 s | 596.7 s |
| | train | 151.7 s | 154.9 s | 119.7 s | 110.7 s | 131.4ms | 58.04ms | 2.443 s | 329.2ms | 606.9 s | 619.6 s | 478.8 s | 442.9 s |
| | validate | 55.49 s | 55.66 s | 42.22 s | 38.45 s | 35.57ms | 18.38ms | 76.20ms | 508.5ms | 222.0 s | 222.6 s | 168.9 s | 153.8 s |
| train | batch | 477.3ms | 491.8ms | 362.0ms | 334.6ms | 249.9ms | 252.0ms | 203.6ms | 181.1ms | 595.6 s | 613.8 s | 451.8 s | 417.6 s |
| | step | 368.4ms | 368.2ms | 265.0ms | 248.8ms | 298.4ms | 298.0ms | 227.5ms | 205.1ms | 459.8 s | 459.5 s | 330.7 s | 310.5 s |
| | backward | 75.53ms | 88.24ms | 63.36ms | 60.07ms | 70.60ms | 70.00ms | 43.12ms | 38.08ms | 94.27 s | 110.1 s | 79.07 s | 74.97 s |
| | pred | 23.59ms | 25.03ms | 22.55ms | 16.65ms | 2.843ms | 4.153ms | 5.166ms | 3.348ms | 29.44 s | 31.24 s | 28.14 s | 20.78 s |
| | draw | 6.212ms | 6.773ms | 5.802ms | 4.176ms | 12.92ms | 23.15ms | 25.29ms | 122.2µs | 7.752 s | 8.453 s | 7.241 s | 5.212 s |
| | zero grad | 1.813ms | 2.169ms | 3.912ms | 3.615ms | 377.3µs | 370.1µs | 390.6µs | 15.83ms | 2.263 s | 2.706 s | 4.883 s | 4.512 s |
| | loss | 1.519ms | 1.260ms | 1.282ms | 1.222ms | 264.3µs | 161.5µs | 196.1µs | 217.2µs | 1.896 s | 1.573 s | 1.600 s | 1.525 s |
| valid | batch | 26.10ms | 27.77ms | 23.18ms | 16.62ms | 15.27ms | 26.57ms | 28.78ms | 19.19ms | 32.68 s | 34.76 s | 29.02 s | 20.81 s |
| | pred | 18.92ms | 20.31ms | 16.89ms | 12.59ms | 2.346ms | 4.193ms | 4.098ms | 2.944ms | 23.68 s | 25.43 s | 21.15 s | 15.77 s |
| | draw | 5.900ms | 6.347ms | 5.292ms | 3.156ms | 13.46ms | 24.95ms | 26.90ms | 17.10ms | 7.387 s | 7.946 s | 6.625 s | 3.951 s |
| | loss | 1.062ms | 927.2µs | 841.1µs | 754.8µs | 516.1µs | 216.2µs | 195.1µs | 915.0µs | 1.330 s | 1.161 s | 1.053 s | 945.1ms |

Kaggle results should be equivalent to Colab Pro, which is the normal P100 instance. Pro P100 is the Colab Pro High RAM P100 instance. Pro T4 is the Colab Pro High RAM T4 instance. Results measured with Simple Profiler Callback.
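Scanning the training-phase means in the RoBERTa mixed precision table shows where Studio Lab trails the Colab Pro P100. This is a small sketch over values copied from the table. The dictionary layout is my own and is not part of the benchmark code.

```python
# Mean durations in milliseconds from the RoBERTa mixed precision table:
# action -> (Pro P100, Studio Lab)
train_means = {
    "step": (368.2, 248.8),
    "backward": (88.24, 60.07),
    "pred": (25.03, 16.65),
    "draw": (6.773, 4.176),
    "zero grad": (2.169, 3.615),
    "loss": (1.260, 1.222),
}

# Keep only the actions where Studio Lab takes longer than the P100,
# expressed as a percentage increase over the P100 time.
slower = {
    action: (studio - p100) / p100 * 100
    for action, (p100, studio) in train_means.items()
    if studio > p100
}

for action, pct in slower.items():
    print(f"{action}: Studio Lab is {pct:.1f}% slower")
```

At only a few milliseconds per batch, the affected action has little influence on the overall training time.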

In single precision, the results again flip, with Studio Lab training 72.2 percent slower overall than Colab Pro P100. The training loop is 67.9 percent slower than Colab Pro P100. As when training XSE-ResNet50 in single precision, this is due to the backward pass and optimizer step being 83.0 percent slower, while Studio Lab is 27.7 percent faster performing all other actions.

RoBERTa Single Precision Benchmark Results

| | | Mean Duration | | | | Duration Std Dev | | | | Total Time | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Phase | Action | Colab Pro | Pro P100 | Pro T4 | Studio Lab | Colab Pro | Pro P100 | Pro T4 | Studio Lab | Colab Pro | Pro P100 | Pro T4 | Studio Lab |
| fit | fit | | | | | | | | | 877.0 s | 885.7 s | 1.602ks | 1.525ks |
| | epoch | 219.2 s | 221.4 s | 400.6 s | 381.1 s | 122.3ms | 124.9ms | 991.9ms | 13.67 s | 877.0 s | 885.6 s | 1.602ks | 1.525ks |
| | train | 163.8 s | 165.7 s | 300.8 s | 287.9 s | 94.80ms | 95.66ms | 347.8ms | 10.89 s | 655.0 s | 662.7 s | 1.203ks | 1.152ks |
| | validate | 55.48 s | 55.72 s | 99.77 s | 93.20 s | 41.20ms | 42.00ms | 660.9ms | 2.794 s | 221.9 s | 222.9 s | 399.1 s | 372.8 s |
| train | batch | 250.3ms | 258.7ms | 459.6ms | 440.2ms | 123.9ms | 127.5ms | 244.5ms | 232.9ms | 625.9 s | 646.7 s | 1.149ks | 1.101ks |
| | step | 114.7ms | 116.2ms | 244.0ms | 236.5ms | 78.62ms | 75.19ms | 308.3ms | 295.3ms | 286.9 s | 290.4 s | 610.0 s | 591.3 s |
| | backward | 108.9ms | 112.4ms | 186.6ms | 181.6ms | 163.2ms | 160.5ms | 120.7ms | 116.5ms | 272.2 s | 280.9 s | 466.6 s | 453.9 s |
| | pred | 17.98ms | 20.64ms | 18.62ms | 13.60ms | 2.377ms | 3.465ms | 3.679ms | 2.383ms | 44.96 s | 51.59 s | 46.55 s | 33.99 s |
| | draw | 5.693ms | 6.211ms | 5.038ms | 4.246ms | 8.306ms | 14.41ms | 17.33ms | 95.83µs | 14.23 s | 15.53 s | 12.59 s | 10.62 s |
| | zero grad | 1.830ms | 2.135ms | 4.091ms | 3.104ms | 350.8µs | 341.4µs | 300.1µs | 9.663ms | 4.575 s | 5.338 s | 10.23 s | 7.760 s |
| | loss | 1.056ms | 1.035ms | 1.094ms | 1.090ms | 297.1µs | 148.4µs | 155.3µs | 156.7µs | 2.640 s | 2.588 s | 2.735 s | 2.725 s |
| valid | batch | 20.41ms | 22.57ms | 19.22ms | 13.12ms | 9.128ms | 16.53ms | 18.10ms | 11.33ms | 51.03 s | 56.42 s | 48.06 s | 32.80 s |
| | pred | 14.11ms | 16.40ms | 13.94ms | 9.745ms | 1.738ms | 3.197ms | 3.018ms | 2.036ms | 35.26 s | 41.01 s | 34.84 s | 24.36 s |
| | draw | 5.390ms | 5.254ms | 4.466ms | 2.710ms | 8.345ms | 15.43ms | 17.35ms | 10.43ms | 13.47 s | 13.13 s | 11.16 s | 6.774 s |
| | loss | 751.9µs | 760.2µs | 693.6µs | 564.0µs | 203.2µs | 250.4µs | 157.6µs | 235.0µs | 1.880 s | 1.900 s | 1.734 s | 1.410 s |

Kaggle results should be equivalent to Colab Pro, which is the normal P100 instance. Pro P100 is the Colab Pro High RAM P100 instance. Pro T4 is the Colab Pro High RAM T4 instance. Results measured with Simple Profiler Callback.

Oddly, the Colab Pro High RAM P100 instance trained slower than the normal Colab Pro instance, despite more CPU cores and CPU RAM and the same GPU. However, it was not a large difference and probably not significant or repeatable.

# XResNet18

For this benchmark, it’s important to know what the draw action is measuring. It’s the time from before drawing a batch from the dataloader to before starting the batch action (which includes the forward and backward pass, loss, and optimizer step and zero grad actions). The dataloader is set to the default prefetch_factor value of two, which means each worker attempts to load two batches in advance before the training loop calls for them.

The lower the draw action is, the better the instance’s CPU is able to keep up with demand.
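To make the draw measurement concrete, here is a minimal standard-library sketch of timing how long the training loop waits for each batch. It is not the Simple Profiler Callback's implementation. `timed_draws` and `slow_loader` are hypothetical names, and the sleeping generator stands in for a dataloader whose CPU work cannot keep up.

```python
import time
from typing import Iterable, Iterator


def timed_draws(loader: Iterable, draw_times: list[float]) -> Iterator:
    """Yield batches from `loader`, recording how long each one took to arrive."""
    iterator = iter(loader)
    while True:
        start = time.perf_counter()
        try:
            batch = next(iterator)  # blocks until the loader has a batch ready
        except StopIteration:
            return
        draw_times.append(time.perf_counter() - start)
        yield batch


def slow_loader(n_batches: int, delay: float):
    """Hypothetical stand-in for a dataloader whose CPU work takes `delay` seconds."""
    for i in range(n_batches):
        time.sleep(delay)
        yield i


draws: list[float] = []
for batch in timed_draws(slow_loader(5, 0.01), draws):
    pass  # the forward pass, loss, backward pass, and optimizer step would run here

print(f"mean draw: {sum(draws) / len(draws) * 1000:.2f} ms")
```

With a real dataloader and prefetching, the wait shrinks toward zero whenever the workers finish preparing the next batch before the GPU finishes the current one.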

Here the results are as expected: more CPU cores means a lower draw time, and newer CPUs outperform older CPUs at the same core count during validation. However, I should note that, excluding the two CPU instance, the only statistically significant validation draw difference (according to the t-test) was between Colab Pro P100 and Studio Lab. This makes sense, as Colab CPU generations vary and so far Studio Lab’s has been consistent.

XResNet18 Benchmark Results

| | | Mean Duration | | | | Duration Std Dev | | | |
|---|---|---|---|---|---|---|---|---|---|
| Phase | Action | Colab Pro | Pro P100 | Pro T4 | Studio Lab | Colab Pro | Pro P100 | Pro T4 | Studio Lab |
| fit | epoch | 51.13 s | 32.42 s | 28.58 s | 24.27 s | 170.1ms | 146.2ms | 403.5ms | 266.9ms |
| | train | 35.69 s | 22.65 s | 20.01 s | 16.91 s | 52.29ms | 148.3ms | 210.7ms | 216.9ms |
| | validate | 15.43 s | 9.766 s | 8.566 s | 7.366 s | 129.2ms | 60.54ms | 206.7ms | 59.39ms |
| train | batch | 230.6ms | 140.8ms | 124.0ms | 102.7ms | 144.8ms | 100.2ms | 102.3ms | 89.08ms |
| | draw | 146.8ms | 35.22ms | 34.64ms | 34.08ms | 135.8ms | 78.72ms | 76.97ms | 66.18ms |
| valid | batch | 238.7ms | 147.2ms | 125.6ms | 105.6ms | 165.5ms | 184.2ms | 160.5ms | 150.6ms |
| | draw | 215.2ms | 117.9ms | 101.3ms | 90.18ms | 165.0ms | 182.9ms | 158.6ms | 151.5ms |

Kaggle results should be equivalent to Colab Pro, which is the normal P100 instance. Pro P100 is the Colab Pro High RAM P100 instance. Pro T4 is the Colab Pro High RAM T4 instance. Results measured with Simple Profiler Callback.
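The post does not say which form of the t-test was used for the validation draw comparison, so the following is only a sketch under assumptions: it uses Welch's unequal-variance form computed from summary statistics, and `N_BATCHES` is a placeholder because the number of timed batches is not reported here.

```python
import math


def welch_t(mean_a, std_a, n_a, mean_b, std_b, n_b):
    """Welch's t statistic and degrees of freedom from summary statistics."""
    var_a, var_b = std_a**2 / n_a, std_b**2 / n_b
    t = (mean_a - mean_b) / math.sqrt(var_a + var_b)
    dof = (var_a + var_b) ** 2 / (var_a**2 / (n_a - 1) + var_b**2 / (n_b - 1))
    return t, dof


# Validation draw means and standard deviations (ms) for Pro P100 and Studio Lab,
# taken from the XResNet18 table. N_BATCHES is a placeholder, not a reported value.
N_BATCHES = 100
t, dof = welch_t(117.9, 182.9, N_BATCHES, 90.18, 151.5, N_BATCHES)
print(f"t = {t:.3f}, degrees of freedom = {dof:.1f}")
```

Turning the statistic into a p-value needs the t distribution, which the standard library lacks. SciPy's `scipy.stats.ttest_ind_from_stats` with `equal_var=False` performs the same test and returns the p-value directly.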

# Colab Tesla K80

Since the free Colab instance’s Tesla K80 has one-fourth less RAM than all the other GPUs, I also reduced the mixed precision batch size by one-fourth, to 48 and 12 for Imagenette and IMDB, respectively. This isn’t a direct comparison in performance, but rather a real-world comparison that users would see. I did not run any single precision tests.
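The batch size arithmetic can be checked with a few lines. The GPU memory figures come from the comparison table at the top of the post, while `scale_batch_size` is a hypothetical helper written for this example.

```python
def scale_batch_size(batch_size: int, memory_fraction: float) -> int:
    """Shrink a batch size in proportion to the available GPU memory."""
    return int(batch_size * memory_fraction)


# GPU RAM in MiB as listed in the comparison table.
K80_MIB, T4_MIB, P100_MIB = 11441, 15109, 16280

# The K80 has roughly three quarters of the memory of the other GPUs.
print(round(K80_MIB / T4_MIB, 2), round(K80_MIB / P100_MIB, 2))

# Mixed precision batch sizes for Imagenette (64) and IMDB (16), scaled by 0.75.
print(scale_batch_size(64, 0.75), scale_batch_size(16, 0.75))
```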

I ran the Imagenette benchmark for two epochs, reduced the IMDB dataset from a twenty percent sample to a ten percent sample, and reduced the training length to one epoch.

The Colab K80 took roughly double the time of all the Colab Pro instances to train on half the number of Imagenette epochs. And equivalent IMDB training would have taken over three times longer on the Colab K80 versus the Colab P100.

If possible, one should stay away from training using a K80 on anything other than small models.

XResNet & RoBERTa Colab K80 Benchmark Results

| XResNet50 | | Duration | | | RoBERTa | | Duration | | |
|---|---|---|---|---|---|---|---|---|---|
| Phase | Action | Mean | Std Dev | Total Time | Phase | Action | Mean | Std Dev | Total Time |
| fit | fit | | | 1.330ks | fit | fit | | | 626.3 s |
| | epoch | 664.9 s | 9.262 s | 1.330ks | | epoch | 626.3 s | | 626.3 s |
| | train | 593.1 s | 7.196 s | 1.186ks | | train | 465.6 s | | 465.6 s |
| | validate | 71.71 s | 2.065 s | 143.4 s | | validate | 160.6 s | | 160.6 s |
| train | batch | 2.959 s | 698.7ms | 1.166ks | train | batch | 2.201 s | 1.030 s | 457.8 s |
| | step | 1.685 s | 80.89ms | 663.9 s | | backward | 1.503 s | 793.6ms | 312.5 s |
| | backward | 1.155 s | 487.7ms | 455.0 s | | pred | 554.2ms | 271.0ms | 115.3 s |
| | pred | 99.67ms | 257.7ms | 39.27 s | | step | 115.7ms | 57.66ms | 24.06 s |
| | draw | 14.02ms | 44.24ms | 5.525 s | | loss | 11.01ms | 5.196ms | 2.290 s |
| | zero_grad | 2.799ms | 719.0µs | 1.103 s | | draw | 10.51ms | 17.78ms | 2.187 s |
| | loss | 2.143ms | 697.3µs | 844.2ms | | zero_grad | 6.685ms | 1.352ms | 1.391 s |
| valid | batch | 127.8ms | 386.6ms | 20.95 s | valid | batch | 551.0ms | 249.1ms | 115.2 s |
| | pred | 105.7ms | 378.7ms | 17.33 s | | pred | 539.2ms | 245.4ms | 112.7 s |
| | draw | 19.91ms | 71.68ms | 3.265 s | | draw | 8.380ms | 16.78ms | 1.751 s |
| | loss | 1.870ms | 1.307ms | 306.7ms | | loss | 3.218ms | 1.468ms | 672.5ms |

Results measured with Simple Profiler Callback.

# Final Thoughts

Overall, I think SageMaker Studio Lab is a good competitor in the free machine learning compute space. For anyone who has been using the free tier of Colab and training models on K80s, it’s almost a straight upgrade across the board.

For those not stuck using the free tier of Colab, SageMaker Studio Lab could be a useful addition to machine learning workflows as an augmentation to Kaggle or Colab Pro. The 17.4 to 32.1 percent faster training in mixed precision than Kaggle or Colab Pro means less time waiting for models to train while iterating on an idea. (If Colab Pro would assign Tesla T4s more often, then the mixed precision speed advantage SageMaker Studio Lab has over Colab Pro would start to evaporate.) Then, once iteration has ended, move training to Kaggle or Colab Pro for the longer runtime. All of this assumes the dataset can fit into the 15GB of storage.

SageMaker Studio Lab is also a strong contender for those just starting out with deep learning due to both the faster training speed and persistent storage, which means the environment need only be set up once, allowing students to focus on learning and not continual package management. (SageMaker Studio Lab guides will need to explicitly and repeatedly mention the necessity of training in mixed precision.)